Apple has unveiled an innovative feature in iOS 18 and iPadOS 18 known as Eye Tracking. This cutting-edge technology allows users to navigate and control their iPhones and iPads using just their eye movements, marking a significant advancement in device interaction.

While Eye Tracking might remind some Android users of features such as Air Gesture on devices from brands like Oppo and Realme, Apple’s approach serves a different purpose.

Unlike the mere gesture controls found on some Android smartphones, Apple’s Eye Tracking is meticulously designed to aid users with physical disabilities. This feature empowers individuals to operate their iPads or iPhones seamlessly through their gaze, enhancing accessibility and user experience.

Tim Cook, CEO of Apple, emphasized the company’s dedication to accessibility and inclusive design. “We believe deeply in the transformative power of innovation to enrich lives,” Cook stated. “For nearly 40 years, Apple has championed inclusive design by embedding accessibility at the core of our hardware and software. We’re continuously pushing the boundaries of technology, and these new features reflect our long-standing commitment to delivering the best possible experience to all of our users.”

Sarah Herrlinger, Apple’s Senior Director of Global Accessibility Policy and Initiatives, echoed this sentiment. “Each year, we break new ground when it comes to accessibility. These new features will make an impact in the lives of a wide range of users, providing new ways to communicate, control their devices, and move through the world,” she added.

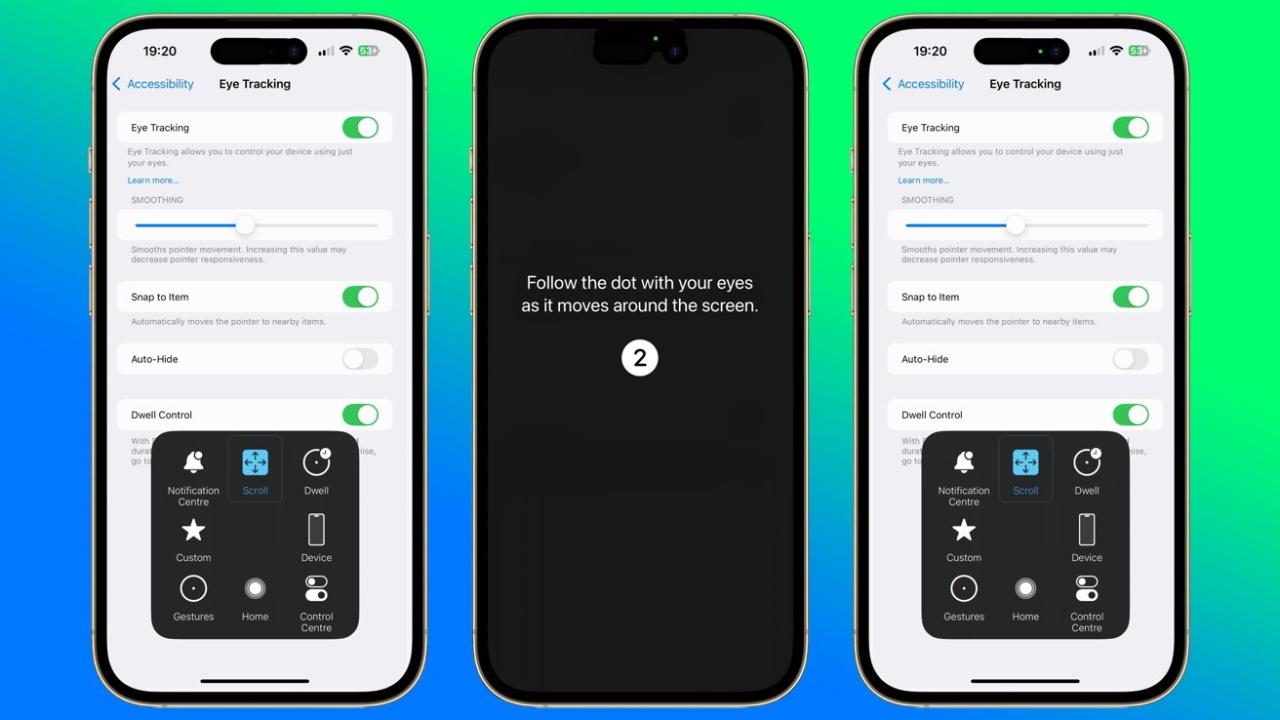

How Eye Tracking Works in iOS 18 and iPadOS 18

Eye Tracking in iOS 18 and iPadOS 18 leverages artificial intelligence (AI) to offer a built-in option for navigating devices using eye movements alone. Here’s a detailed look at how this feature operates:

A. Detection and Calibration

- Front-Facing Camera: The feature uses the device’s front-facing (selfie) camera to detect eye movements, so no additional hardware is needed.

- Quick Calibration: The calibration process is swift, taking only a few seconds. Users follow colored dots that appear on the screen to calibrate the system to their specific eye movements.

- Machine Learning Enhancements: The capabilities of Eye Tracking are continually refined through built-in machine learning, ensuring improved accuracy and responsiveness over time.
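Apple has not published how its calibration works internally, so the following is only a generic sketch of the idea behind dot-based calibration: the user looks at dots whose screen positions are known, and the system fits a mapping from raw gaze estimates to screen coordinates. The function names `fit_axis` and `calibrate`, the sample values, and the simple per-axis linear model are all assumptions made for illustration.

```python
from typing import Callable, List, Tuple

Point = Tuple[float, float]


def fit_axis(raw: List[float], screen: List[float]) -> Tuple[float, float]:
    """Least-squares fit of screen = scale * raw + offset for one axis."""
    n = len(raw)
    mean_raw = sum(raw) / n
    mean_screen = sum(screen) / n
    var = sum((r - mean_raw) ** 2 for r in raw)
    cov = sum((r - mean_raw) * (s - mean_screen) for r, s in zip(raw, screen))
    scale = cov / var  # requires at least two distinct raw values on this axis
    return scale, mean_screen - scale * mean_raw


def calibrate(samples: List[Tuple[Point, Point]]) -> Callable[[Point], Point]:
    """Build a raw-gaze -> screen mapping from (raw_gaze, dot_position) pairs."""
    sx, ox = fit_axis([r[0] for r, _ in samples], [d[0] for _, d in samples])
    sy, oy = fit_axis([r[1] for r, _ in samples], [d[1] for _, d in samples])

    def to_screen(raw: Point) -> Point:
        return (sx * raw[0] + ox, sy * raw[1] + oy)

    return to_screen


# Hypothetical samples: raw gaze estimates recorded while the user looked
# at four dots shown at known screen positions (in points).
samples = [
    ((0.1, 0.2), (0.0, 0.0)),
    ((0.5, 0.2), (200.0, 0.0)),
    ((0.1, 0.8), (0.0, 600.0)),
    ((0.5, 0.8), (200.0, 600.0)),
]
to_screen = calibrate(samples)
x, y = to_screen((0.3, 0.5))
print(round(x, 3), round(y, 3))
```

A real system would likely use a richer model and many more samples; the point of the sketch is only that a few seconds of looking at known targets is enough to estimate such a mapping.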

B. Privacy and Security

- Local Data Storage: All user settings and calibration data are stored securely on the device itself. This information is shared with neither Apple nor any third party.

- Recalibration Requirement: For enhanced security, users must recalibrate their gaze each time they turn the Eye Tracking feature off and on. This adds an extra layer of protection for user data.

C. Dwell Control Functionality

- Button Activation: Eye Tracking allows users to press buttons or activate features through Dwell Control. When users hold their gaze on a specific app or screen area for a set duration, the system automatically executes the corresponding action.

- Seamless App Integration: This functionality works seamlessly across all apps running on iPadOS and iOS, eliminating the need for additional software or hardware.

- Comprehensive Navigation: Users can navigate through app elements and utilize functions like physical buttons, swipes, and other gestures solely with their eyes, enhancing overall device usability.
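The dwell behavior described above can be sketched as a small timer that restarts whenever the gaze moves to a different target and fires once the gaze has rested long enough. This is a minimal illustration, not Apple’s implementation: the `DwellDetector` class, its `update` method, and the one-second threshold are hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellDetector:
    """Fires once when the gaze rests on the same target long enough."""

    dwell_seconds: float = 1.0      # hypothetical threshold, not Apple's default
    _target: Optional[str] = None   # target currently being looked at
    _since: float = 0.0             # time the gaze first landed on that target
    _fired: bool = False            # whether this dwell has already triggered

    def update(self, target: Optional[str], now: float) -> Optional[str]:
        """Feed one gaze sample; return the target to activate, or None."""
        if target != self._target:
            # Gaze moved to a new target (or off all targets): restart the timer.
            self._target, self._since, self._fired = target, now, False
            return None
        held_long_enough = now - self._since >= self.dwell_seconds
        if target is not None and not self._fired and held_long_enough:
            self._fired = True      # do not re-trigger while the gaze stays put
            return target
        return None


detector = DwellDetector(dwell_seconds=1.0)
for t, looked_at in [(0.0, "Mail"), (0.5, "Mail"), (1.0, "Mail"), (1.5, "Mail")]:
    activated = detector.update(looked_at, t)
    if activated:
        print(f"activate {activated} at t={t}")
```

Firing only once per dwell is a design choice in this sketch: without the `_fired` flag, an action would repeat for as long as the user kept looking at the same spot.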

Supported Devices

Eye Tracking is available on a range of devices running iOS 18 and iPadOS 18. Here is a comprehensive list of compatible devices:

iPhones:

- iPhone SE 3

- iPhone 12

- iPhone 12 Mini

- iPhone 12 Pro

- iPhone 12 Pro Max

- iPhone 13

- iPhone 13 Mini

- iPhone 13 Pro

- iPhone 13 Pro Max

- iPhone 14

- iPhone 14 Plus

- iPhone 14 Pro

- iPhone 14 Pro Max

- iPhone 15

- iPhone 15 Plus

- iPhone 15 Pro

- iPhone 15 Pro Max

- iPhone 16

- iPhone 16 Plus

- iPhone 16 Pro

- iPhone 16 Pro Max

iPads:

- iPad Mini (6th generation)

- iPad (10th generation)

- iPad Air (4th generation and above)

- iPad Air M2

- iPad Pro 11-inch (3rd generation and above)

- iPad Pro 11 M4

- iPad Pro 12.9-inch (3rd generation and above)

Activating Eye Tracking on Your iPhone

Activating Eye Tracking on your iPhone is straightforward. Follow these steps to enable and calibrate the feature:

A. Accessing Settings

- Open the Settings app on your iPhone.

- Navigate to Accessibility.

B. Enabling Eye Tracking

- Select Eye Tracking from the accessibility options.

- Toggle the Eye Tracking feature to On.

C. Calibrating Your Gaze

- Follow the on-screen instructions to calibrate your eye movements. You will be prompted to focus on colored dots that appear on the screen to ensure accurate tracking.

- Once calibration is complete, Eye Tracking will be ready for use, allowing you to control your iPhone with your eyes.

Practical Applications of Eye Tracking

Eye Tracking opens up a multitude of possibilities for users, particularly those with physical disabilities. Here are some practical applications:

A. Enhanced Accessibility

- Independent Device Operation: Users with limited mobility can navigate their devices independently, accessing apps, sending messages, and performing tasks without physical interaction.

- Customized Control: The ability to control device functions through eye movements allows for a highly personalized user experience, tailored to individual needs.

B. Improved User Experience

- Hands-Free Navigation: Eye Tracking lets users interact with their devices without touching them, which is particularly useful when their hands are occupied or touch input is impractical.

- Seamless Integration: The feature integrates smoothly with existing iOS and iPadOS functionalities, ensuring a consistent and intuitive user experience across all apps and services.

C. Future Innovations

- Enhanced AI Capabilities: As Apple continues to develop Eye Tracking, future updates may introduce even more sophisticated AI-driven features, further enhancing device interactivity and usability.

- Broader Accessibility Features: Eye Tracking could pave the way for additional accessibility innovations, setting new standards for inclusive technology design.

Comparing Eye Tracking with Other Technologies

While Eye Tracking shares similarities with other gesture-based control systems, it offers distinct advantages:

A. Precision and Accuracy

- Eye Tracking provides more precise control compared to general gesture recognition, allowing for specific actions based on subtle eye movements.

B. Integration with AI

- The use of AI and machine learning enhances the feature’s adaptability and accuracy, making it more reliable and responsive over time.

C. Comprehensive Device Compatibility

- Unlike some gesture control systems that require specific hardware, Eye Tracking works seamlessly across a wide range of Apple devices without additional accessories.

User Privacy and Data Security

Apple places a strong emphasis on user privacy and data security, particularly with features like Eye Tracking:

A. On-Device Processing

- All Eye Tracking data is processed locally on the device, ensuring that sensitive information remains private and secure.

B. No Third-Party Sharing

- Apple does not share Eye Tracking data with third parties, maintaining user confidentiality and trust.

C. Secure Calibration Data

- The calibration data required for Eye Tracking is stored securely on the device, and calibration must be repeated each time the feature is toggled, preventing unauthorized access.

Potential Challenges and Considerations

While Eye Tracking offers numerous benefits, there are some challenges and considerations to keep in mind:

A. Initial Calibration

- Users may need to invest a few moments in the initial calibration process to ensure optimal accuracy and responsiveness.

B. Device Compatibility

- Not all Apple devices support Eye Tracking, so users should verify compatibility before trying to use the feature.

C. Learning Curve

- New users may require some time to become accustomed to controlling their devices through eye movements, although the intuitive design minimizes this hurdle.

Future Prospects of Eye Tracking in the Apple Ecosystem

Looking ahead, Eye Tracking is poised to become an integral part of Apple’s accessibility and user interface strategy:

A. Enhanced Accessibility Tools

- Apple may expand Eye Tracking capabilities to offer even more comprehensive accessibility tools, further empowering users with diverse needs.

B. Broader Application Integration

- As developers become more familiar with Eye Tracking, we can expect deeper integration of this feature across a wider array of apps and services, enhancing overall functionality.

C. Continuous AI Improvements

- Ongoing advancements in AI and machine learning will likely lead to continual improvements in Eye Tracking accuracy and capabilities, ensuring the feature remains cutting-edge.

Conclusion

Apple’s introduction of Eye Tracking in iOS 18 and iPadOS 18 represents a significant leap forward in device accessibility and user interaction. By leveraging advanced AI and machine learning, Eye Tracking offers a precise, secure, and user-friendly way to control iPhones and iPads using just eye movements. This feature not only enhances the user experience for those with physical disabilities but also sets a new standard for inclusive technology design.

Eye Tracking is a testament to Apple’s unwavering commitment to accessibility and innovation, ensuring that technology remains a powerful and inclusive tool for everyone.